Small Notes on Machine Learning

Machine Learning has become one of the hottest topics of our time. Programs in the domain of "Convolutional Neural Networks" (CNN) that can recognize cats in images are described in an awe-struck tone as a form of "Artificial Intelligence", even though they essentially perform simple operations on a massive scale and yield candidate labels sorted by probability. It is of course difficult not to get caught up in the hype, but I still think it is worth remembering some of the problems that are already known today.

ImageNet

One of the reasons why Machine Learning has been so widely discussed in recent years, and why it is so highly regarded, is surely the progress in the ImageNet contest. More than 14 million images have been hand-annotated with the objects they contain and are thus readily available to researchers working on object recognition software. The results of the contest have therefore become a de facto benchmark for machine learning researchers.

For a long time, contenders were not able to achieve a "top-5 accuracy" better than ~75%. Before proceeding, let's consider what this "top-5 accuracy" means. A classifier usually outputs a list of categories ("labels") sorted by their computed probabilities. For top-5 accuracy it is enough that the "true" category (known through the hand annotation) is among the first five of them. Consider an example where, for a given picture, the classifier outputs the probabilities "63% cat, 26% panda, 4% tiger, 3% dog, 1% rhinoceros". Even if the picture showed a dog, the answer would still count as accurate in the "top-5" sense. One always has to keep this in mind when looking at the near-perfect top-5 accuracies now common in this field.
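To make the metric concrete, here is a minimal Python sketch of top-k accuracy. It assumes the classifier output is a dictionary mapping each label to its probability; the function names and the three-picture "test set" are hypothetical illustrations, not taken from any benchmark toolkit.

```python
from typing import Dict, List, Tuple


def top_k_labels(probs: Dict[str, float], k: int) -> List[str]:
    """Return the k labels with the highest probability, most likely first."""
    return sorted(probs, key=lambda label: probs[label], reverse=True)[:k]


def is_top_k_correct(probs: Dict[str, float], true_label: str, k: int = 5) -> bool:
    """A prediction counts as correct if the true label is among the top k."""
    return true_label in top_k_labels(probs, k)


def top_k_accuracy(samples: List[Tuple[Dict[str, float], str]], k: int = 5) -> float:
    """Fraction of (classifier output, true label) pairs that are top-k correct."""
    hits = sum(is_top_k_correct(probs, label, k) for probs, label in samples)
    return hits / len(samples)


# Classifier output from the example above, written as fractions.
# The remaining 3% would be spread over other categories, omitted here.
example = {"cat": 0.63, "panda": 0.26, "tiger": 0.04, "dog": 0.03, "rhinoceros": 0.01}

print(top_k_labels(example, 5))               # ['cat', 'panda', 'tiger', 'dog', 'rhinoceros']
print(is_top_k_correct(example, "dog", k=1))  # False: "cat" is the top-1 answer
print(is_top_k_correct(example, "dog", k=5))  # True: "dog" is 4th, so it counts

# Hypothetical mini test set reusing the same output for three pictures.
samples = [(example, "dog"), (example, "cat"), (example, "horse")]
print(top_k_accuracy(samples, k=1))  # 0.3333333333333333
print(top_k_accuracy(samples, k=5))  # 0.6666666666666666
```

The same output scores 1/3 under top-1 but 2/3 under top-5, which is exactly why a high top-5 figure says less than it seems to.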

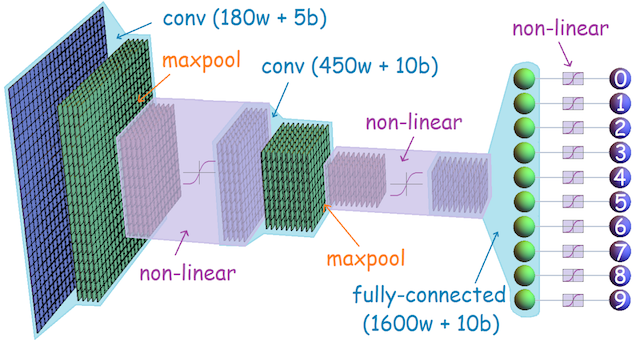

When AlexNet raised this score by a whopping 10 percentage points in 2012, this was only possible because of a new approach to the existing problem. For AlexNet, the key to success was a deep Convolutional Neural Network (CNN) combined with the use of GPUs (i.e. graphics cards) in the "training phase". This training phase is so computationally expensive that running it on general-purpose CPUs alone was simply not feasible. The realization that the massively parallel hardware built for rendering 3D scenes could also be applied successfully to the training phase started the "deep learning revolution".

Modern CNNs like GoogLeNet, VGG-16 or ResNet-50 yield results so good that we tend to infer that the program has somehow learned to truly recognize the objects in the pictures. Of course, since CNNs are essentially programs with millions of parameters (the so-called "weights"), we cannot directly explain how and why a specific set of weights learned by the algorithm solves the given problem. Our natural tendency toward anthropomorphism then kicks in and attributes "Artificial Intelligence" without further ado.

Researchers from the University of Tübingen came up with clever methods for testing this "Intelligence Hypothesis" more closely. If the programs truly tell cats from dogs, they should be able to do so even for specially constructed artificial inputs. These inputs were created with image manipulations such as black-and-white filters, outline filters and even texture manipulations. Human test subjects served as a control group; incidentally, on the original data this control group did not match the results of the CNNs.

Surprisingly, the accuracy of the CNNs dropped quickly compared to that of the humans, and the networks could even be fooled completely, for example by overlaying an elephant-skin texture on a cat picture. Such an image was consistently classified as a "sure elephant".

The study seems to show that the CNNs did not really learn to recognize the objects in question, but rather their textures. More research will be needed to better understand the capabilities of CNNs, but at this stage we are reminded that our attribution of intelligence was very much premature.

It is worth reading the whole article "How neural nets are really just looking at textures", as it includes illustrative images and a link to the original paper.

Oracle Behaviour

The previous section should remind us that the inference algorithms produced by ML in particular are really black boxes, or "oracles". They yield a result, but it is not possible to understand the process leading up to it. By construction, they work on the data they were trained on, and they are validated on another set of well-known data; from then on, we simply have to have faith that they will handle other inputs in a (hopefully) sane way.

Things become truly problematic, however, when it is no longer about telling cats from dogs but, for example, about providing "objective measures of the likelihood a defendant will commit further crimes". The article "New research suggests racial bias in criminal courts may be inevitable" shows the real-world effects of bad training data.

Another example of how things can go spectacularly wrong is shown in the article "Microsoft Created a Twitter Bot to Learn From Users. It Quickly Became a Racist Jerk." Less than 24 hours after the proud unveiling of the AI bot "Tay", Microsoft had to shut down the system and could only hope that the incident would not become very public. When talking to people, I rarely meet anyone who knows this story, so things seem to have worked out in Microsoft's favour.

But even when we do our best, problems remain: Google themselves have published a paper reminding us that while it is easy to achieve good results quickly with machine learning, it is not well understood that, in addition to the well-known maintenance problems of software in general, ML systems have ML-specific issues that are rarely talked about. Again, it is worth reading the original paper "Hidden Technical Debt in Machine Learning Systems" in full.

Before deploying ML products, there should be at least some evaluation of these factors.

Fooling Machine Learning

Also well known, but discussed far less than the success stories of ML, is the possibility of deliberately fooling such systems with so-called "Adversarial Examples". It is somewhat ironic that the exact same algorithms used to train the network can also be used to find inputs that make the network fail in a predetermined way. The article "Create your own Adversarial Examples" does a very good job of explaining the technique with real code examples.
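To illustrate the irony mentioned above, here is a minimal sketch of one well-known technique, the Fast Gradient Sign Method (FGSM) of Goodfellow et al.; it is not necessarily the method used in the linked article. To stay self-contained it assumes a toy logistic-regression classifier instead of a CNN, and the weights, the 1000-"pixel" input and the step size `eps = 0.03` are all invented for illustration. The key point: training differentiates the loss with respect to the weights, while FGSM differentiates the very same loss with respect to the input pixels.

```python
import math
from typing import List


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def sign(v: float) -> float:
    """-1, 0 or +1 depending on the sign of v."""
    return float((v > 0) - (v < 0))


def predict(w: List[float], b: float, x: List[float]) -> float:
    """Probability of class 1 ("cat") under a logistic-regression model."""
    return sigmoid(b + sum(wi * xi for wi, xi in zip(w, x)))


def input_gradient(w: List[float], b: float, x: List[float], y: int) -> List[float]:
    """Gradient of the binary cross-entropy loss with respect to the INPUT x.

    For this model dLoss/dlogit = p - y and dlogit/dx = w. Training would
    differentiate the same loss with respect to w and b instead."""
    p = predict(w, b, x)
    return [(p - y) * wi for wi in w]


def fgsm(w: List[float], b: float, x: List[float], y: int, eps: float) -> List[float]:
    """Fast Gradient Sign Method: move every pixel by eps in the direction that
    increases the loss, then clip back into the valid range [0, 1]."""
    grad = input_gradient(w, b, x, y)
    return [min(1.0, max(0.0, xi + eps * sign(g))) for xi, g in zip(x, grad)]


# Hypothetical toy "network": 1000 pixels, weights alternating +0.25 / -0.25.
n = 1000
w = [0.25 if i % 2 == 0 else -0.25 for i in range(n)]
b = 0.0
# Hypothetical "cat" picture: pixels slightly brighter where the weights are positive.
x = [0.52 if i % 2 == 0 else 0.48 for i in range(n)]
y = 1  # true label: cat

x_adv = fgsm(w, b, x, y, eps=0.03)

print(f"clean:       P(cat) = {predict(w, b, x):.3f}")      # 0.993
print(f"adversarial: P(cat) = {predict(w, b, x_adv):.3f}")  # 0.076
print(f"largest pixel change: {max(abs(a - c) for a, c in zip(x_adv, x)):.3f}")  # 0.030
```

No pixel moves by more than 0.03 (roughly 8 of 255 grey levels), yet the logit drops from 5.0 to -2.5: because every step is aligned with the sign of its weight, the changes add up to eps × Σ|w_i| = 0.03 × 250 = 7.5. Goodfellow et al. offer this kind of high-dimensional, near-linear behaviour as an intuition for why tiny perturbations can fool real networks.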

The previous article also has a short passage about how adversarial examples could be used to inflict physical harm on people. The hypothetical scenario assumed that it should be possible to physically create "Stop" traffic signs that would look normal to people but not to the steering algorithm of the car. Only yesterday, the article "Small stickers on the ground trick Tesla autopilot into steering into opposing traffic lane" showed something similar, but this time with real Tesla cars and small, real physical stickers strategically placed on the road.

This whole class of problems shows that the "real world" can be a very nasty place for algorithms to work in. Of course, some nice results can be achieved with ML, but the sheer potential for failure is scary. At least for me it is.
